KBI Marketing & WebTargetedTraffic have been conducting site audits for many years, and our clients sometimes tell us how frustrating it can be to run into the same common technical SEO issues over and over again.

This post outlines the most common technical SEO problems we have encountered while doing hundreds of site audits over the years. We hope our solutions help you when you come across these problems on your own site.


What is Technical SEO?

Technical SEO is the part of SEO that happens behind the scenes: the set coordinator of your SEO strategy, if you will.

Put simply, it’s the technical actions taken to improve a site’s rankings in the search results. It covers the nitty-gritty aspects of SEO like crawling, indexing, site structure, migrations, page speed, and so on.

And yes, it can get a bit complicated.

But if you’re at all concerned with how your site is ranking (and if you’re here, you probably are) it’s something that simply can’t be overlooked.

To prevent the loss of customers and sustain business growth, businesses need to identify and fix the common on-site technical SEO problems with their websites.

Here are the most common on-site technical SEO issues you’re likely facing and how to solve them:

1) Website Speed

What’s Wrong?

The rank of your website on SERPs depends a lot on site speed. Faster sites deliver a better user experience, so slower websites tend to slide down the rankings. Speed also affects crawling: Google may reduce its crawl rate for your website if the server response time is more than 2 seconds. This, in turn, can mean fewer pages get indexed!

Slow page load speed can hurt your rankings

To give you a sense of what speed you should be aiming for, SEMrush compiled the results of a study into the following:

- if your site loads in 5 seconds, it is faster than approximately 25% of the web

- if your site loads in 2.9 seconds, it is faster than approximately 50% of the web

- if your site loads in 1.7 seconds, it is faster than approximately 75% of the web

- if your site loads in 0.8 seconds, it is faster than approximately 94% of the web

The Solution:

Start with Google PageSpeed Insights. This tool measures the performance of your pages on both desktop and mobile and flags the ones that are not correctly optimized. The best part is, PageSpeed Insights offers actionable descriptions of how to fix each problem.

WordPress users can also ask their hosting providers for help. It is recommended to choose a good WordPress hosting service that has been tested for uptime and speed (check out this list of 10 Best WordPress Hosting Services). Beyond that, optimizing the images on your pages, leveraging browser caching, and minifying CSS and JavaScript can all speed up your website.

Some ways to increase site speed include:

- Enabling compression – You’ll have to talk to your web development team about this. It’s not something you should attempt on your own as it usually involves updating your web server configuration. However, it will improve your site speed.

- Optimizing images – Many sites have images that are 500 KB or more in size. Many of those images can be compressed to a fraction of that size without a visible loss in quality. The fewer bytes your page has to load, the faster it renders.

- Leveraging browser caching – If you’re using WordPress, you can grab a plugin that will enable you to use browser caching. That helps users who revisit your site because they’ll load resources (like images) from their hard drive instead of over the network.

- Using a CDN – A content delivery network (CDN) will deliver content quickly to your visitors by loading it from a node that’s close to their location. The downside is the cost. CDNs can be expensive. But if you’re concerned about user experience, they might be worth it.
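To get a feel for what compression buys you, here’s a minimal Python sketch using the standard-library gzip module. It is only an illustration, assuming a made-up, repetitive HTML page; on a live site, compression (gzip or Brotli) is switched on in the web server or CDN configuration, not in application code like this.

```python
import gzip


def gzip_savings(body: str) -> tuple[int, int]:
    """Return (original size, gzip-compressed size) in bytes for a text response body."""
    raw = body.encode("utf-8")
    return len(raw), len(gzip.compress(raw))


# Hypothetical page: HTML is full of repeated markup, so it compresses well.
sample_html = "<div class='product'><p>Sample product description</p></div>\n" * 200
original, compressed = gzip_savings(sample_html)
print(f"{original} bytes -> {compressed} bytes "
      f"({100 * (1 - compressed / original):.0f}% smaller)")
```

Real pages are less repetitive than this sample, so expect smaller (but still worthwhile) savings.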

2) Low Text-to-HTML Ratio

What’s Wrong?

This occurs when there’s more backend code on a page than text that people can read, which can cause the page to load slowly.

Often caused by a poorly coded site, it can be solved by removing unneeded code or adding more on-page text.

Low ratios could indicate:

- Slow-loading websites, because of messy and excessive code

- Hidden text, which is a red flag for search bots

- Excessive Flash, inline styling, and JavaScript

The Solution:

JavaScript is a great programming language, but if you don’t know what you’re doing, it can wind up slowing down your site. To fix a low ratio, add relevant on-page text where necessary, move inline scripts to separate files, and remove unnecessary code.
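If you want to measure the ratio yourself, the sketch below approximates it with Python’s built-in html.parser: it collects the readable text (skipping the contents of script and style elements) and divides its length by the total length of the HTML. This is an assumption about how the ratio is computed; audit tools differ in exactly what they count and in what they flag as “low.”

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text a visitor would read, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def text_to_html_ratio(html: str) -> float:
    """Share of the page's characters that are readable text (0.0 to 1.0)."""
    if not html:
        return 0.0
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks)
    return len(text) / len(html)


page = "<html><body><p>Hello world</p><script>var x = 1;</script></body></html>"
print(f"Text-to-HTML ratio: {text_to_html_ratio(page):.0%}")
```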

3) Broken Links

What’s Wrong?

Not all SEO issues are easy to spot, and this one definitely falls into that category.

While a broken link here or there is often inevitable, too many broken links spell SEO trouble.

No one wants to keep clicking through a site full of 404 errors, so broken links will likely result in a higher bounce rate and lower traffic.

Both of those things are bad for SEO. Internal links not only improve your SEO, but they’re also the easiest links to add because they’re part of your own web property.

If your website has hundreds of pages, one or two broken links are commonplace and hardly an issue. However, dozens of broken links are a huge blow, because:

- The user’s quality perception of your website deteriorates.

- Broken links can waste your crawl budget. When search bots keep hitting dead ends, they may stop crawling before they reach your important pages, leaving those pages un-crawled and un-indexed.

- Your website’s page authority is also negatively impacted.

The Solution:

Open Google Search Console and check the Page indexing report (older versions of Search Console called this the “Crawl Errors” report, under “Crawl”) to see which of your pages return 404 responses. Fix any 404 errors early so they don’t frustrate your visitors and send them to other parts of your website (or off your site altogether) instead.
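Search Console only reports the 404s Google has already found. To check a page’s outgoing links yourself, here’s a minimal Python sketch using only the standard library. The names find_broken_links and http_status are hypothetical helpers, and the checker sends HEAD requests, which some servers reject; a more thorough checker would fall back to a GET request in that case.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError, URLError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects the href of every <a> element on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def http_status(url):
    """Return the HTTP status code for url via a HEAD request (0 if unreachable)."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=10) as resp:
            return resp.status
    except HTTPError as err:  # 4xx and 5xx responses arrive as exceptions
        return err.code
    except (URLError, TimeoutError):  # DNS failure, refused connection, timeout
        return 0


def find_broken_links(html, base_url, get_status=http_status):
    """Return (absolute_url, status) for every link that is unreachable or returns 4xx/5xx."""
    collector = LinkCollector()
    collector.feed(html)
    broken = []
    for href in collector.hrefs:
        if href.startswith(("mailto:", "tel:", "javascript:", "#")):
            continue  # not web pages, nothing to fetch
        url = urljoin(base_url, href)
        status = get_status(url)
        if status == 0 or status >= 400:
            broken.append((url, status))
    return broken
```

The get_status parameter lets you swap in a cached or rate-limited fetcher when auditing many pages.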

4) Errors in Language Declarations

What’s Wrong?

For websites with global audiences, language declarations are important because they let search engines detect the language of each page. They also improve the experience for users of text-to-speech tools, such as screen readers, which can then read the content with the correct pronunciation for its language. Plus, there are inherent international SEO and geo-location benefits.

Ideally, you want your content delivered to the right audience – which also means those that speak your language.

Failing to declare the default language of your site will hurt your pages’ ability to be translated, as well as your location-based and international SEO.

Make sure you use this list to declare your language correctly.

The Solution:

To declare the language and region a web page targets, use the rel="alternate" hreflang link element.

In the <head> section of http://example.com/uk, add a link element pointing to the French version of the page at http://example.com/uk-fr, like this:

<link rel="alternate" hreflang="fr" href="http://example.com/uk-fr" />

One common mistake is using the wrong language codes; refer to this HTML Language Code Reference list to choose the correct code. Remember, correct language declarations help search engines serve the right version of a page to the right audience, which is important for SEO.

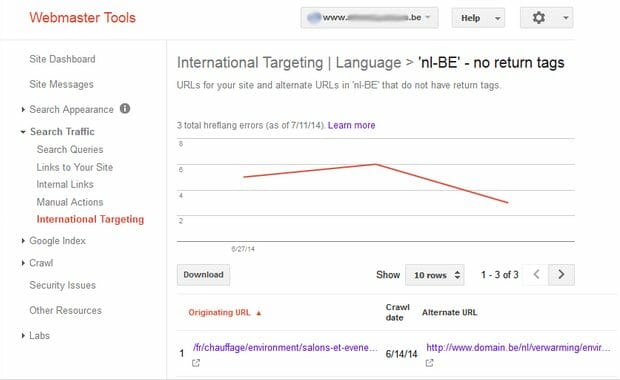

Another mistake is missing return tags: hreflang annotations that fail to cross-reference each other. These annotations need to be confirmed from the other pages; that is, if page A links to page B, then page B must link back to page A. Older versions of Google Search Console flagged these errors under International Targeting; Google has since retired that report, so a site audit crawler is now the most reliable way to find them.
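Because every language version must list all the others (and, per Google’s guidelines, itself), hreflang tags easily fall out of sync when written by hand. Here’s a minimal Python sketch that generates the tags from one mapping and flags missing return tags. The helper names and the en-gb/fr mapping are hypothetical examples.

```python
from html import escape


def hreflang_tags(alternates):
    """alternates maps an hreflang code (e.g. "en-gb") to the URL of that version.
    Returns the <link> elements to place in the <head> of EVERY version, itself included."""
    return "\n".join(
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    )


def missing_return_tags(annotations):
    """annotations maps each page URL to the set of URLs its hreflang tags point to.
    Returns (page, target) pairs where target does not link back to page."""
    problems = []
    for page, targets in annotations.items():
        for target in targets:
            if target != page and page not in annotations.get(target, set()):
                problems.append((page, target))
    return sorted(problems)


versions = {"en-gb": "http://example.com/uk", "fr": "http://example.com/uk-fr"}
print(hreflang_tags(versions))
```

Generating the same tag set for every version from a single mapping is what keeps the annotations reciprocal; the checker is for auditing pages that were tagged some other way.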

5) Duplicate Content

What’s Wrong?

Almost all of the SEO professionals we queried cited duplicate content as a top technical concern. Simply put, according to Google, duplicate content is content that is either exactly the same as or “appreciably similar” to other content, whether on your own site or on another domain. Make sure your website does not fall into that category. Why? Duplicate content can hurt your rankings, because search engines have to choose which version to show, and if the duplication looks deliberately deceptive, Google may penalize your website, too. In the worst case, your site may lose the chance to rank on SERPs altogether.

The Solution:

International sites that target multiple countries with content in a variety of languages can also end up with a lot of duplicate content, according to Matt Naeger, executive vice president, digital strategy for Merkle. In this scenario, Naeger recommends using the rel="alternate" hreflang annotation within the <head> of every page to identify which language and region each near-identical version of the content targets. Using IP detection to generate the correct language and default currency for a page is another solution.

A common duplicate content issue occurs when a site is reachable both at a URL beginning with “www” and at a URL without it, according to Ramon Khan, online marketing director, National Air Warehouse. Thankfully, there’s an easy fix.

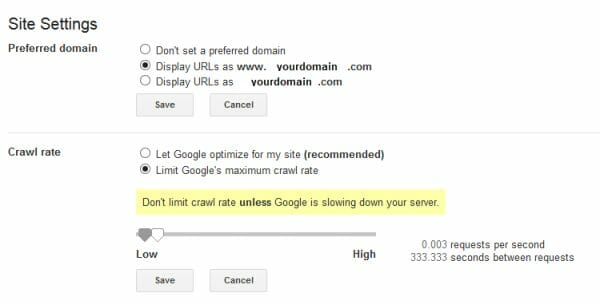

“Try to type in your URL with the non-www URL and see if it goes to the www version, then try the opposite,” Khan says. “If both work without either one of them redirecting, you are not properly set up. If so, go to your Google Webmaster Tools. Go to Settings and then Site Settings. See if you have specified a version you prefer. If you’re not sure, get a professional to assist you in determining which version to set up and keep using that going forward.”

Similarly, by default most websites end up with multiple versions of the homepage, reached through various URLs. Having multiple versions of the homepage can cause a lot of duplicate content issues, and it means any link equity the site receives is spread across the different URLs, says Colin Cheng, marketing manager of MintTwist.

You can fix this issue “by choosing one URL that you want as your main URL,” according to Steven Weldler, VP, online marketing for CardCash. “This is entirely a matter of preference but once you choose one, stick with it. All the other URLs should automatically point to the main URL using a 301 redirect.”
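In practice these redirects are configured in your web server, CDN, or CMS, but the decision logic itself is simple. This minimal Python sketch maps every scheme, host, and homepage variant to one main URL. The preferred version (https://www.example.com) and the rule that treats /index.html as the homepage are assumptions for illustration; redirect_target is a hypothetical helper.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferred version of the site; substitute your own.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.example.com"


def redirect_target(url):
    """Return the URL to 301-redirect to, or None if url is already the main version."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]  # treat /index.html as the directory itself
    target = urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, path, parts.query, ""))
    return None if target == url else target


for candidate in ["http://example.com", "https://example.com/index.html",
                  "https://www.example.com/"]:
    print(candidate, "->", redirect_target(candidate) or "no redirect needed")
```

Redirecting in a single hop straight to the final URL avoids redirect chains, which waste crawl budget.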

Use tools like Siteliner and Copyscape to analyze your web content and ensure its originality. If duplicate content pops up, follow either of the two options below:

Set your preferred URL version. Older versions of Google Webmaster Tools let you do this under Settings > Site Settings; Google has since retired that preferred-domain setting, so today you signal your preference with 301 redirects and canonical tags. Once your preferred option (say, www) is clear, links that point to the non-www version of your website are treated as links to the www version.

If several URLs serve the same content, links and ranking signals get split across them, and tracking parameters can multiply the number of duplicate URLs. To prevent this, use the canonical tag. It tells search engines which URL is the original version of the resource, and they generally treat links to the duplicate page as links to the original. That way, you don’t lose SEO value from those links. Keep in mind that Google treats the canonical tag as a strong hint rather than a strict directive.

Add a canonical tag by including the following line of code in the <head> of both the original and the duplicate pages:

<link rel="canonical" href="https://yoursite.com/category/resource" />

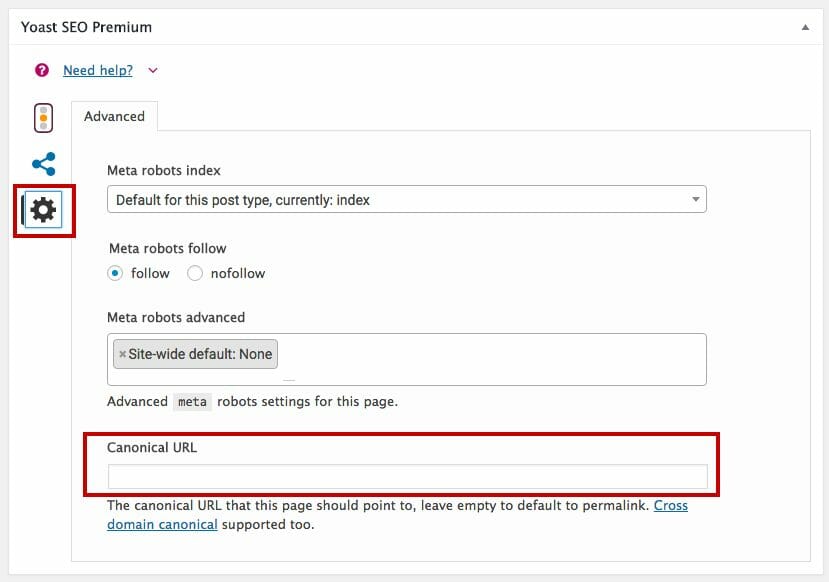

If you use WordPress with the Yoast SEO plugin, you don’t need to edit the HTML by hand: set the canonical URL in the Advanced tab of the Yoast SEO box at the bottom of the post editor.
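To confirm that a page’s canonical tag actually made it into the published HTML, whether you added it by hand or through a plugin, you can parse the page. Here’s a minimal sketch using Python’s standard-library html.parser; canonical_urls is a hypothetical helper, and the assumption is that a healthy page ends up with exactly one canonical URL.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.urls.append(attributes.get("href"))


def canonical_urls(html):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.urls


page = '<head><link rel="canonical" href="https://yoursite.com/category/resource" /></head>'
found = canonical_urls(page)
if len(found) != 1:
    print("Expected exactly one canonical tag, found", len(found))
else:
    print("Canonical URL:", found[0])
```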

6) Missing Alt Tags and Broken Images

What’s Wrong?

Problems with image optimization are common, but unless your website relies heavily on images, you can save most of them for later. Missing alt tags and broken images, however, are the two most prevalent problems business owners must fix.

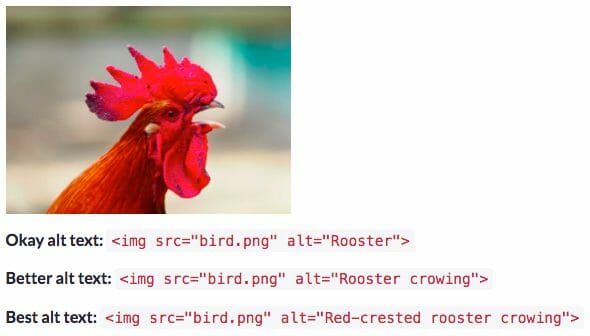

Alt tags (strictly speaking, alt attributes) are HTML attributes on images that describe their contents. If an image fails to render properly on your website, the browser shows the alt text in its place, describing both the image’s content and its function. Alt text also helps search engine crawlers understand the page, which can reinforce your target keyword.

The Solution:

Implementing these tags is simple: locate the image element in your HTML code and add an alt attribute to it.

The markup looks like this:

<img src="image.jpg" alt="image description" title="image tooltip">

Moz provides some great examples of the best alt text:
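Finding images without alt text by hand is tedious on large pages. Here’s a minimal Python sketch using the standard-library html.parser that lists every img element with no alt attribute; images_missing_alt is a hypothetical helper. It deliberately accepts an empty alt="", which is the correct markup for purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Records the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # alt="" is allowed on purpose: it marks an image as decorative.
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "(no src)")


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = ('<img src="logo.png" alt="Company logo">'
        '<img src="chart.png">'
        '<img src="divider.png" alt="">')
print(images_missing_alt(page))
```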

7) Problems in Title Tags

What’s Wrong?

Title tags can have a variety of issues that affect SEO, including:

- Duplicate tags

- Missing title tags

- Too long or too short title tags

Your title tag, or page title, helps both users and search engines determine what your page is about, making it an (understandably) important part of the optimization process.

To get your title tags just right, you need to start with the basics.

First, your title tag should be 50-60 characters long and include one or two of your target keywords. To avoid technical issues, make sure you don’t have any duplicates on your site.


The Solution:

- Keep your title tags concise. Google truncates titles by pixel width rather than by a fixed character count. Some tests have found that newer devices display up to about 70-71 characters, up from 50-60, but staying within 50-60 characters remains the safer choice.

- When in doubt, follow this format:

  Primary Keyword – Secondary Keyword | Name of Brand

- Give a unique title tag to every page; for instance, for an e-store, you can create title tags easily using this formula:

  [Item Name] – [Item Category] | [Brand]

- Put the keyword at the beginning of the title tag.
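These checks are easy to automate across a whole site. Here’s a minimal Python sketch that flags missing, duplicate, and out-of-range title tags and builds e-store titles from the [Item Name] – [Item Category] | [Brand] formula. The 50 to 60 character range comes from the guidance earlier in this section and is only a proxy, since Google actually truncates by pixel width; the function names and sample pages are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Captures the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_titles(pages, min_len=50, max_len=60):
    """pages maps URL -> HTML. Returns URL -> list of title problems (clean pages omitted)."""
    titles = {}
    for url, html in pages.items():
        parser = TitleExtractor()
        parser.feed(html)
        titles[url] = (parser.title or "").strip()

    counts = Counter(title for title in titles.values() if title)
    issues = {}
    for url, title in titles.items():
        problems = []
        if not title:
            problems.append("missing title")
        else:
            if len(title) < min_len:
                problems.append(f"too short ({len(title)} chars)")
            if len(title) > max_len:
                problems.append(f"too long ({len(title)} chars)")
            if counts[title] > 1:
                problems.append("duplicate title")
        if problems:
            issues[url] = problems
    return issues


def ecommerce_title(item, category, brand):
    """Builds a title using the [Item Name] – [Item Category] | [Brand] formula."""
    return f"{item} – {category} | {brand}"


print(ecommerce_title("Trail Running Shoe", "Footwear", "Acme"))
```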

8) Messy URLs

What’s Wrong?

URLs that end in something like “..index.php?p=367594” mean nothing to you – or to search bots. They’re not intuitive and they’re not user friendly.

Here’s an illustration that will drive the point home:

The Solution:

Here’s what you can do to fix this all-too-common on-site technical SEO issue:

- Add keywords in the URLs

- Use hyphens to separate words, instead of using spaces

- Canonicalize multiple URLs serving the same content

- Try to shorten long URLs (100+ characters) to fewer than 70 characters

- Use a single domain and subdomain

- Use lowercase letters
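Most CMSs generate URL slugs for you, but the rules above are simple to apply in code. Here’s a minimal Python sketch of a slug generator that lowercases, separates words with hyphens, and caps the length at 70 characters without cutting a word in half. slugify is a hypothetical helper, and its ASCII-folding step assumes your URLs use Latin-script words.

```python
import re
import unicodedata


def slugify(title, max_length=70):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Fold accented letters to ASCII ("é" -> "e"); other non-ASCII characters are dropped.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Lowercase, then collapse every run of spaces, underscores, or punctuation into one hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    if len(text) > max_length:
        # Look one character past the limit so a word ending exactly at the limit is kept.
        cut = text[: max_length + 1]
        text = cut.rsplit("-", 1)[0] if "-" in cut else text[:max_length]
    return text


print(slugify("10 Common Technical SEO Issues & How to Fix Them"))
# 10-common-technical-seo-issues-how-to-fix-them
```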

9) Low Word Count

What’s Wrong?

Though simplicity and brevity are often desirable in marketing, too little text could cause your SEO to suffer.

Google tends to rank content with depth higher, and longer pages often indicate that.

Thin content could be seen as your attempt to bloat the number of web pages on your website, without delivering quality per page.

The Solution:

Thoroughly research a topic to find out all related and relevant info to include in your content.

Using long-tail keywords, and keywords phrased as questions, as subheadings will enhance the voice-search appeal of your webpage, not to mention give structure to your lengthy content.

Some industry studies suggest an average word count of between 2,250 and 2,500 words for a blog post.

10) Substandard Mobile Experience

What’s Wrong?

This is big, because Google now uses mobile-first indexing: it began rolling out in 2018 and has since become the default. In other words, the search giant ranks websites based on their mobile versions, so being mobile-ready sounds like an obvious choice. However, many websites are still not properly optimized for mobile browsing.

Think about this:

- Mobile phone usage is at an all-time high. Last year, there were over 224 million smartphone users in the U.S. alone.

- Considering that 40% of web visitors will skip your website if it’s not mobile friendly, you need to optimize your site for mobile and you need to optimize it NOW.

The Solution:

- Don’t block JavaScript, images, and CSS, because Google’s search bots look for these elements to correctly categorize the content.

- Avoid Flash content and optimize for cross-device conversions.

- Design the website so that fat-fingered smartphone users can easily tap on buttons and browse comfortably.

- Search results with rich snippets tend to stand out on mobile devices and attract more clicks, so use schema.org structured data.

- Take the litmus test. Subject your website to these three mobile-readiness tests, and act on the insights you get:

11) Non-Performing Contact Forms

What’s Wrong?

Is there something wrong with your contact form? Do users not want to fill it in?

Research by Formisimo reveals that only 49% of 1.5 million web users start to fill out a form when they see it. What’s more, out of this 49%, only 16% submit a completed form. So you need to go the extra mile and rectify this problem if you want to collect your customers’ info.

The Solution:

- Try to make the contact form as compelling to users as possible. Make it as short and simple as possible.

- Collect only the information you need, such as name and e-mail address.

- Add extra fields, like phone number or job title, only if they’re absolutely necessary; a common recommendation is to have no more than five fields in your form.

- Use something interesting for your CTA copy other than the generic “Submit.”

- Experiment with the position, color, copy, and fields of your form.

- Gauge the performance results using A/B testing to see what works for your business and what does not.

12) Problems in Robots.txt

What’s Wrong?

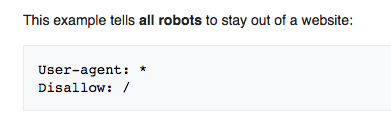

Is your site not getting indexed by the search engines? If so, that’s a big problem, and the robots.txt file might be to blame. Search engine spiders read this text file to determine which URLs on your site they are permitted to crawl, so robots.txt acts like a rulebook for crawling a website.

How to Check:

To determine whether there are issues with the robots.txt file, type your website URL into your browser followed by “/robots.txt”. If you get a result that reads “User-agent: * Disallow: /”, then you have an issue.

The Solution:

First, check your website’s robots.txt by typing yoursite.com/robots.txt into your browser’s address bar. These files look a bit different for every site; however, you should watch out for Disallow: /

If you see that, immediately let your web developer know so they can fix the problem. The Disallow: / line tells spiders not to crawl your entire website. Keep in mind that changes to the robots.txt file can have major consequences for your website if you’re not familiar with the process, so this task is best left to an experienced developer.
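Before (or after) your developer edits the file, you can test a robots.txt against the URLs you care about. This minimal sketch uses Python’s standard-library urllib.robotparser and parses the file’s text directly rather than fetching it; blocked_paths is a hypothetical helper and www.example.com a placeholder. Note that urllib.robotparser does not implement every Google extension (such as * wildcards inside paths), so treat its answers as a sanity check rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, user_agent="Googlebot",
                  site="https://www.example.com"):
    """Return the paths that robots_txt forbids user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly; no network access
    return [path for path in paths if not parser.can_fetch(user_agent, site + path)]


blocking = "User-agent: *\nDisallow: /"
healthy = "User-agent: *\nDisallow: /wp-admin/"
important = ["/", "/blog/", "/wp-admin/options.php"]

print(blocked_paths(blocking, important))
print(blocked_paths(healthy, important))
```

With the blocking file every path comes back as blocked, which is exactly the “Disallow: /” problem described above.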

13) Misconfigured NOINDEX

What’s Wrong?

One of the most prevalent SEO issues faced by business owners is a misconfigured NOINDEX directive. In fact, its destructive effects can far outweigh those of a misconfigured robots.txt.

When NOINDEX is configured appropriately, it tells search engines to keep certain low-value pages out of their index. (For example, blog category archives that span multiple pages.)

However, when configured incorrectly, NOINDEX can immensely damage your search visibility by removing all pages with a specific configuration from Google’s index. This is a massive SEO issue.

It’s common to NOINDEX large numbers of pages while the website is in development, but once the website goes live, it’s imperative to remove the NOINDEX tag. Do not blindly trust that it was removed, as the results will destroy your site’s search engine visibility.

How to Check:

- Right click on your site’s main pages and select “View Page Source.” Use the “Find” command (Ctrl + F) to search for lines in the source code that read “NOINDEX” or “NOFOLLOW” such as:

- <meta name="robots" content="NOINDEX, NOFOLLOW">

- If you don’t want to spot check, use SEO Audits, KBI.Marketing’s site audit tool, to scan your entire site.

Here’s why NOINDEX is so much more dangerous: a misconfigured robots.txt is not going to remove the pages of your site from the Google index, but a NOINDEX directive is capable of wiping every single one of your indexed pages out of it. NOINDEX is useful for websites in the development phase, since it prevents them from showing up prematurely in users’ search results. But on an established business website, it brings nothing but trouble.

The Solution:

The first thing you need to do is locate the problem. While it is easier (and quicker) to use a crawler like Screaming Frog that scans many pages at once, the recommended method for finding NOINDEX on your website is to conduct a manual check.

Go through the source code of each page, and if you find NOINDEX, replace it with INDEX or remove the robots meta tag entirely, since indexing is the default. Once you’ve made the change, Google will start indexing those pages again the next time it recrawls them.
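The manual check described above can also be scripted for a list of pages. Here’s a minimal Python sketch that looks for noindex in robots meta tags and in the X-Robots-Tag HTTP header, which can carry the same directive without it ever appearing in the page source. is_noindexed is a hypothetical helper; it treats “none” as noindex (Google defines none as noindex, nofollow) and, to keep things short, ignores user-agent-specific header values such as “googlebot: noindex”.

```python
from html.parser import HTMLParser


def _split_directives(value):
    return [part.strip().lower() for part in value.split(",") if part.strip()]


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.directives += _split_directives(attributes.get("content", ""))


def is_noindexed(html, headers=None):
    """True if the page's meta tags or X-Robots-Tag header contain noindex (or none)."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    directives = list(finder.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives += _split_directives(value)
    return "noindex" in directives or "none" in directives


print(is_noindexed('<meta name="robots" content="NOINDEX, NOFOLLOW">'))  # True
```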

14) Meta Descriptions

What’s Wrong?

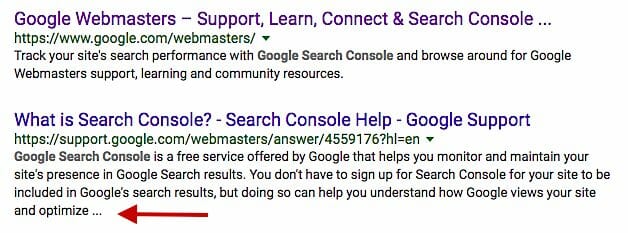

Online users often find that Google has replaced part of a title or description in the results pages with an ellipsis (a series of dots), like the second example in the image above. Why? Because the text exceeds the allotted character or pixel width.

When this happens, it’s frustrating for users who are trying to work out whether your site provides the info they’re looking for.

The Solution:

Keep the pixel length of your page titles and meta descriptions within the space search engines display, so users see your complete message instead of a truncated one and don’t click on a different link.
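Google measures that space in pixels, so any character limit is only an approximation. This minimal Python sketch flags missing or likely-truncated meta descriptions using a character budget; the 155-character default is an assumption based on commonly cited guidance, not a limit Google publishes, and check_meta_description is a hypothetical helper.

```python
from html.parser import HTMLParser


class MetaDescriptionFinder(HTMLParser):
    """Stores the content of the first <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if (tag == "meta" and self.description is None
                and attributes.get("name", "").lower() == "description"):
            self.description = attributes.get("content", "").strip()


def check_meta_description(html, max_chars=155):
    """Return "missing", "likely truncated (N chars)", or "ok"."""
    finder = MetaDescriptionFinder()
    finder.feed(html)
    description = finder.description
    if not description:
        return "missing"
    if len(description) > max_chars:
        return f"likely truncated ({len(description)} chars)"
    return "ok"


print(check_meta_description(
    '<meta name="description" content="A short, specific summary of the page.">'))
```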

15) Errors in XML Sitemaps

What’s Wrong?

XML sitemaps tell Google which pages exist on your site and which ones you want crawled. A missing or erroneous sitemap can therefore give Google an incomplete or inaccurate picture of your site: search engines may have a harder time discovering your content and understanding your site’s hierarchy. Google Search Console or Bing Webmaster Tools can help you discover this problem.

The Solution:

The landing page of the Sitemaps report lists the sitemaps submitted to Search Console. Click on one of them for more information, and drill down further if it’s a sitemap index. Among other details, you will see any errors listed.

To sort out the problem, make sure the Sitemap generation and submission plugin on your site is working properly and without any hiccups. That’s why it makes sense to use SEO plugins that are properly integrated and have favorable reviews.
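If your plugin’s output keeps failing, it can help to see what a minimal valid sitemap looks like. This sketch builds one with Python’s standard-library xml.etree.ElementTree, following the sitemaps.org protocol (a urlset of url entries, each with a required loc and an optional lastmod). build_sitemap and the sample URLs are hypothetical; a real generator would also respect the protocol’s limit of 50,000 URLs per sitemap file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (url, lastmod) pairs; lastmod is "YYYY-MM-DD" or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc  # ElementTree escapes & < > for us
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/?page=2&sort=new", None),
]))
```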

16) Not Enough Use of Structured Data

Google defines structured data as:

… a standardized format for providing information about a page and classifying the page content …

Structured data is a simple way to help Google search crawlers understand the content and data on a page. For example, if your page contains a recipe, an ingredient list would be an ideal type of content to feature in a structured data format.

Address information, like this example from Google, is another type of data perfect for a structured data format:

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "url": "http://www.example.com",
  "name": "Unlimited Ball Bearings Corp.",
  "contactPoint": {
    "@type": "ContactPoint",
    "telephone": "+1-401-555-1212",
    "contactType": "Customer service"
  }
}
</script>
```

This structured data can then appear on the SERPs as a rich snippet, which gives your listing more visual appeal.

How to Fix It:

As you roll out new content, identify opportunities to include structured data in the page, and coordinate the process between content creators and your SEO team. Better use of structured data may help improve CTR and possibly your rank position in the SERPs. Once you implement structured data, review your Google Search Console (GSC) reports regularly to make sure Google is not reporting any issues with your structured data markup.
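If your pages are generated from a CMS or database, you can build the JSON-LD programmatically instead of hand-editing it for every page. This minimal Python sketch reproduces the Organization example above with the standard json module; organization_jsonld is a hypothetical helper, and you would still validate the output with Google’s Rich Results Test before relying on it.

```python
import json


def organization_jsonld(name, url, telephone, contact_type="Customer service"):
    """Return a <script> element holding schema.org Organization markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "url": url,
        "name": name,
        "contactPoint": {
            "@type": "ContactPoint",
            "telephone": telephone,
            "contactType": contact_type,
        },
    }
    # Escape "<" so a value such as "</script>" cannot close the element early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(organization_jsonld("Unlimited Ball Bearings Corp.",
                          "http://www.example.com", "+1-401-555-1212"))
```

Serializing with json.dumps (rather than string templates) guarantees valid JSON, including correct quoting of names that contain quotes or ampersands.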

Final Words

So there you have it.

Hopefully you’ve now got a better understanding of the serious on-site technical SEO issues plaguing websites – and their easy fixes. To make sure you’re not losing customers or business growth, take a proactive approach and check each of these issues yourself.

These are the most common issues we have found with client websites in the past few months and we hope this helps you in your own ranking endeavors.

If you’re still having trouble then contact us to find out how we could help you gain results that last and improve your conversion rates in the process.

Buy targeted traffic and increase sales here:

High Quality Targeted Website Traffic

Affiliate Targeted Traffic To Your URL

Targeted eCommerce Traffic To your Store

Organic Traffic / Search Engine Advertising

Email Traffic / Best Solo Ads Provider

Gambling / Casino Targeted Traffic